RESEARCH

From automated driving to ChatGPT, deep learning and generative AI are transforming and enriching our lives. The main technical challenge of such AI technology is power consumption. AI consumes a lot of power because processing large amounts of data requires a very large number of multiply-and-accumulate operations. The power consumption of servers for AI processing is increasing exponentially.

To solve the power problem caused by AI, we need next-generation integrated circuits (Beyond IC) specialized for the AI era, with performance beyond what an extension of conventional ICs can offer. We are researching wired-logic processors and 3D integration technologies specialized for AI processing, with the goal of reducing power by three orders of magnitude or more. We aim to contribute to the realization of a sustainable, progressive, and prosperous society that utilizes AI everywhere.

THEME 01 AI Processors

Current AI technology is based on neural networks, which are designed to mimic the neural circuits of the human cerebrum.

An artificial neural network mimics how elements called neurons transmit signals through a large number of synapses in a biological neural network. To do so, it performs a large number of repetitive multiply-and-accumulate operations on very large, sparse matrices. Although power can be reduced by using GPUs, which excel at parallel processing, access to the memory that stores intermediate computations and training results becomes the new bottleneck.
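As a minimal sketch of what "multiply-and-accumulate on a sparse matrix" means, the Python below computes one layer of a toy network and counts the operations. The function name `dense_layer_mac` and the example values are hypothetical and chosen only for illustration; this is not the lab's implementation.

```python
def dense_layer_mac(inputs, weights):
    """Compute one layer's outputs and count multiply-and-accumulate (MAC) steps.

    weights[j][i] is the (illustrative) synaptic weight from input neuron i
    to output neuron j. Zero weights are skipped, which is how sparsity
    reduces the amount of work.
    """
    outputs = []
    mac_count = 0
    for row in weights:
        acc = 0.0
        for x, w in zip(inputs, row):
            if w == 0.0:
                continue  # a zero weight contributes nothing, so no MAC is needed
            acc += x * w  # one multiply-and-accumulate operation
            mac_count += 1
        outputs.append(acc)
    return outputs, mac_count


# Toy example: 3 input neurons, 2 output neurons, half of the weights are zero.
example_inputs = [1.0, 2.0, 3.0]
example_weights = [
    [0.5, 0.0, 1.0],
    [0.0, 0.0, 2.0],
]
example_outputs, example_macs = dense_layer_mac(example_inputs, example_weights)
```

Every weight that is actually used must be fetched from memory before its multiplication, which is why memory access, rather than arithmetic alone, tends to dominate once the arithmetic is parallelized.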

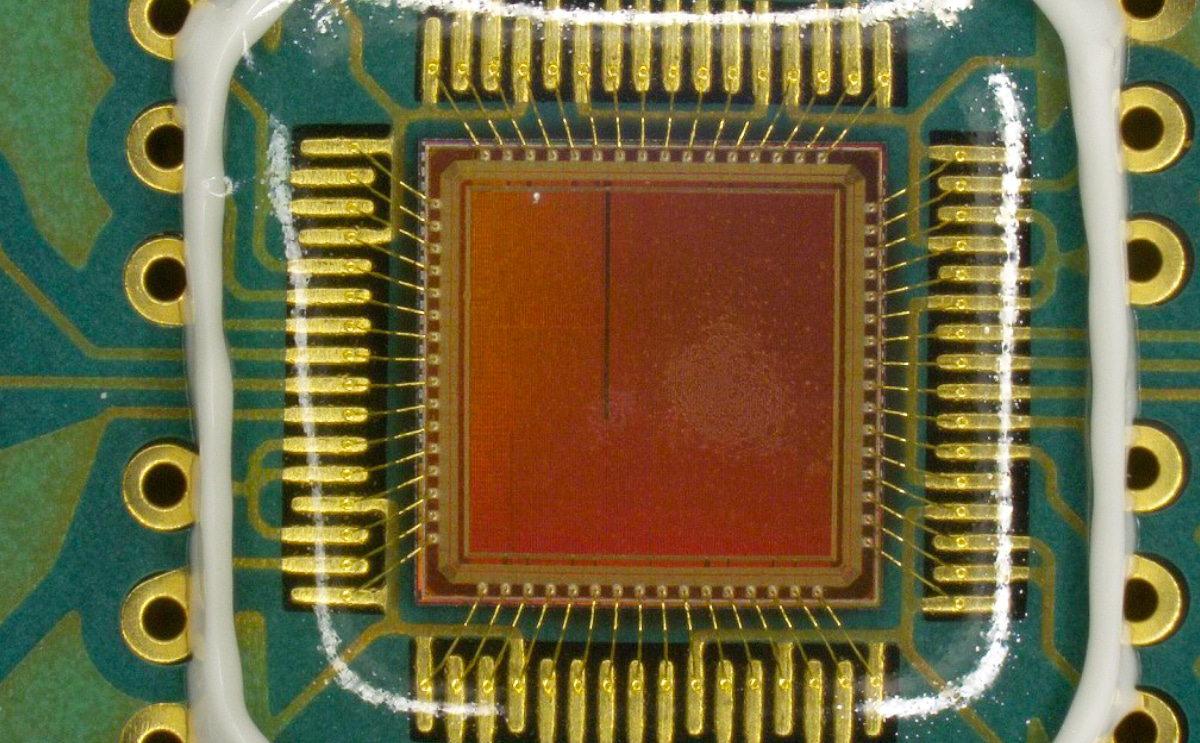

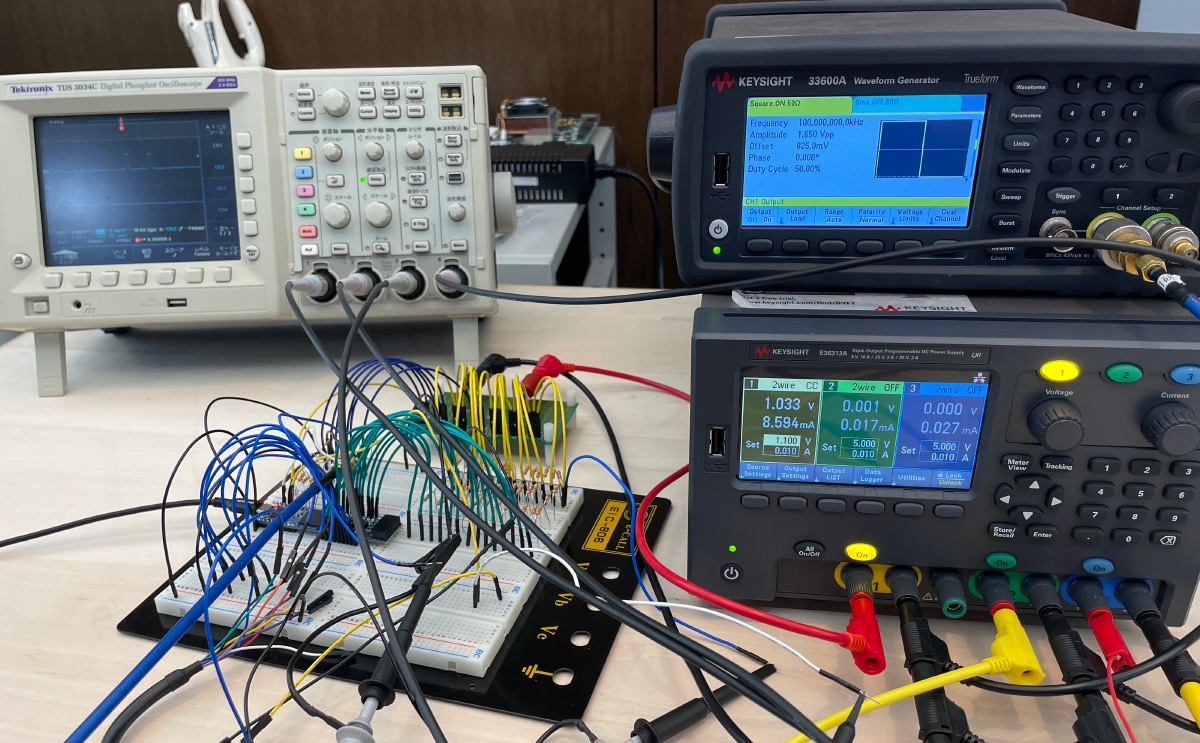

At Kosuge Lab, we focus not only on the circuits themselves but also on the neural network models to be implemented. We are studying and developing highly power-efficient AI processors through the co-design of neural networks and circuits.

Through discussions with neuroscience researchers at MIT and the University of Tokyo, we are developing a “neuro-inspired” low-power AI processor that mimics the characteristics of the cerebrum. Our goal is to realize an implantable AI processor that consumes 1/1000 of the power of conventional processors.

Recent research results:

[1] A. Kosuge, Y.-C. Hsu, R. Sumikawa, T. Ishikawa, M. Hamada, and T. Kuroda, "A 10.7-μJ/frame 88% Accuracy CIFAR-10 Single-chip Neuromorphic FPGA Processor Featuring Various Nonlinear Functions of Dendrites in Human Cerebrum," in IEEE Micro, vol. 43, no. 6, pp. 19-27, Nov.-Dec. 2023.

[2] A. Kosuge, R. Sumikawa, Y.-C. Hsu, K. Shiba, M. Hamada, and T. Kuroda, "A 183.4nJ/inference 152.8uW Single-Chip Fully Synthesizable Wired-Logic DNN Processor for Always-On 35 Voice Commands Recognition Application," in IEEE Symposium on VLSI Circuits, June 2023.

[3] D. Li et al., "Efficient FPGA Resource Utilization in Wired-Logic Processors Using Coarse and Fine Segmentation of LUTs for Non-Linear Functions," in IEEE International Symposium on Circuits and Systems (ISCAS), May 2024 (to be presented).

Recent press coverage:

MIT Technology Review Japan: Innovators Under 35

https://www.technologyreview.jp/l/innovators_jp/261818/Atsutake-Kosuge/

THEME 02 3D Chip Integration

ChatGPT and other generative AI technologies are developed by using extremely large amounts of data to extract, through learning, the vast body of knowledge disseminated via YouTube and the Internet. As in the neural network of the cerebrum, knowledge is stored in artificial neural networks as the weight coefficients of the synapses connecting neurons. These weight coefficients can amount to several terabytes of data.

Because the memory capacity achievable with today’s semiconductor technology is limited, such a large amount of data cannot be handled by a single processor and memory. Current AI technology requires a supercomputer with hundreds of tightly connected processors. As the number of processors increases, the communication distance between processors grows, and power consumption rises rapidly with it.
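The scale argument above can be made concrete with a back-of-the-envelope calculation. The sketch below is illustrative only: the parameter count, the bytes per weight, and the memory capacity per processor are hypothetical assumptions, not figures from this page, and the function names are invented for the example.

```python
def weight_bytes(num_weights, bytes_per_weight):
    """Total storage needed for the weight coefficients, in bytes."""
    return num_weights * bytes_per_weight


def min_processors(total_bytes, memory_per_processor_bytes):
    """Lower bound on the processor count from storage alone (ceiling division)."""
    return -(-total_bytes // memory_per_processor_bytes)


# Hypothetical assumptions, chosen only to illustrate the arithmetic:
ASSUMED_NUM_WEIGHTS = 10**12              # one trillion weight coefficients
ASSUMED_BYTES_PER_WEIGHT = 2              # 16-bit weights
ASSUMED_MEMORY_PER_PROCESSOR = 80 * 10**9 # 80 GB attached to each processor

model_bytes = weight_bytes(ASSUMED_NUM_WEIGHTS, ASSUMED_BYTES_PER_WEIGHT)
processors_needed = min_processors(model_bytes, ASSUMED_MEMORY_PER_PROCESSOR)

print(f"Weights: {model_bytes / 10**12:.1f} TB")
print(f"Processors needed just to hold the weights: {processors_needed}")
```

This counts storage for the weights only. Intermediate results, training state, and throughput requirements push the real processor count higher, which is consistent with the need for large, tightly connected systems described above.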

Since larger neural networks offer better AI performance, we expect the required memory capacity to continue to increase exponentially, resulting in a rapid rise in power consumption.

The memory that stores AI models and the processor that performs large amounts of calculations using these models both consist of semiconductor integrated circuits. These integrated circuits are composed of elements called transistors, which have been miniaturized every two years over the last 50 years, dramatically increasing memory capacity and processor performance. However, transistor size is approaching the size of an atom, and further miniaturization is becoming more and more difficult. A new integrated circuit paradigm is needed to expand scale without relying on miniaturization.

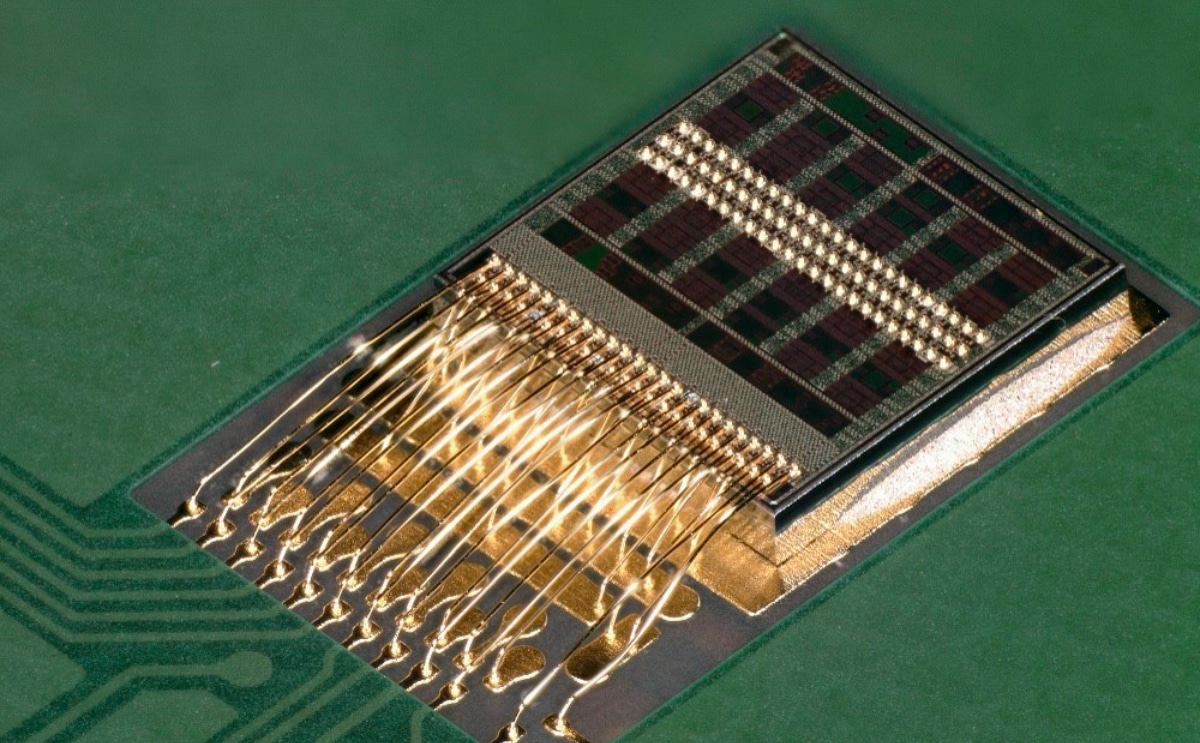

At Kosuge Lab, we are researching 3D chip integration technology. We are creating a cube composed of multiple memory and processor chips stacked on top of each other. We are implementing a large amount of high-density, low-power signal wiring in this cube to achieve wide memory bandwidth with low access latency.

With this technology, AI models that previously required multiple supercomputers can be realized in one palm-sized package, significantly reducing power consumption. Because such a package can be physically installed anywhere without restrictions, we believe it will allow us to realize advanced intelligence in cars and robots.

Recent research results:

[1] K. Shiba, M. Okada, A. Kosuge, M. Hamada, and T. Kuroda, "A 12.8-Gb/s 0.5-pJ/b Encoding-less Inductive Coupling Interface Achieving 111-GB/s/W 3D-Stacked SRAM in 7-nm FinFET," in IEEE Solid-State Circuits Letters, vol. 6, pp. 65-68, March 2023.

[2] K. Shiba, M. Okada, A. Kosuge, M. Hamada, and T. Kuroda, "A 7-nm FinFET 1.2-TB/s/mm^2 3D-Stacked SRAM Module with 0.7-pJ/b Inductive Coupling Interface Using Over-SRAM Coil and Manchester-encoded Synchronous Transceiver," in IEEE Journal of Solid-State Circuits, vol. 58, no. 7, pp. 2075-2086, July 2023.

[3] A. Kosuge and T. Kuroda, "[Invited] Proximity Wireless Communication Technologies: An Overview and Design Guidelines," in IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 69, no. 11, pp. 4317-4330, Nov. 2022.